[Paper Review] NeRF : Representing Scenes as Neural Radiance Fields for View Synthesis (ECCV2020)

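I had only understood the NeRF model at the level of browsing many blogs and YouTube videos, but after reading the paper closely my understanding feels much deeper. Let me write it up and make it completely my own! The following ...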

dusruddl2.tistory.com

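↑ I wrote the paper review above, but I began to wonder whether I really understood the NeRF model properly, so I wrote this post.

It is a brief review that focuses on the parts that confused me.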

nerf/tiny_nerf.ipynb at master · bmild/nerf

Code release for NeRF (Neural Radiance Fields).

github.com

For the code, I referred to tiny_nerf.ipynb from the NeRF repository.

NeRF

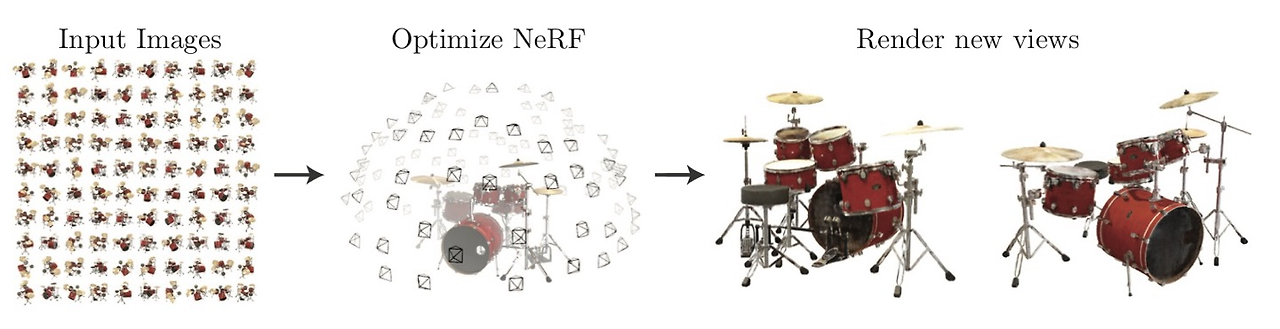

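The NeRF model takes images captured from multiple views as input and uses them to build a virtual 3D space.

I call it a virtual 3D space because, unlike explicit representations such as point clouds, voxels, and meshes, where the 3D geometry actually exists as data, NeRF considers a 3D space to have been created once it can render a 2D image from any arbitrary viewpoint.

(This is why the drums in the "optimize NeRF" part of the figure above are drawn semi-transparent: they do not explicitly exist.)

For example, if we train NeRF on input images that look at an object from directions A, B, and C, we can then obtain the image seen from any new direction (D, E, F, ...) as well!

This is what we mean by saying that a 3D space has been created. (Unlike explicit methods, the geometry is not actually stored anywhere.)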

NeRF Overview

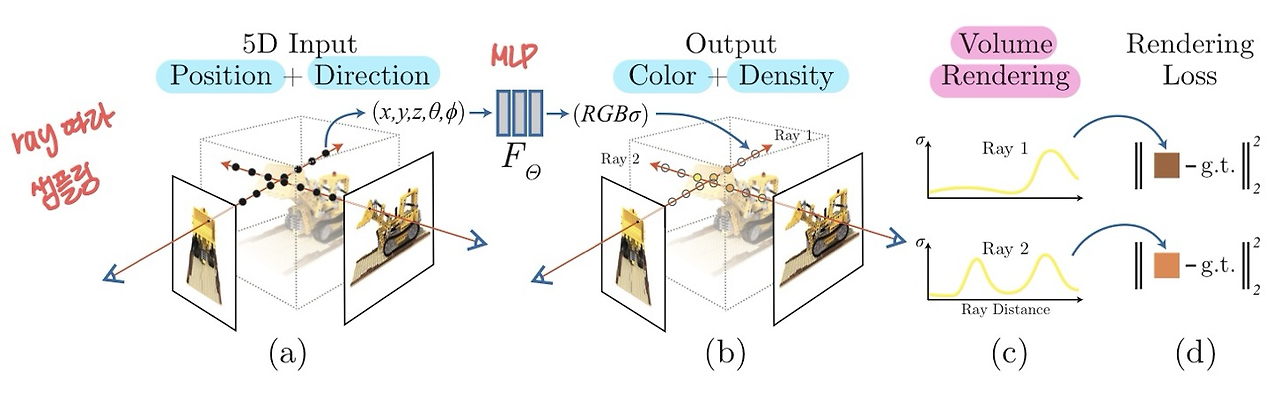

(a)

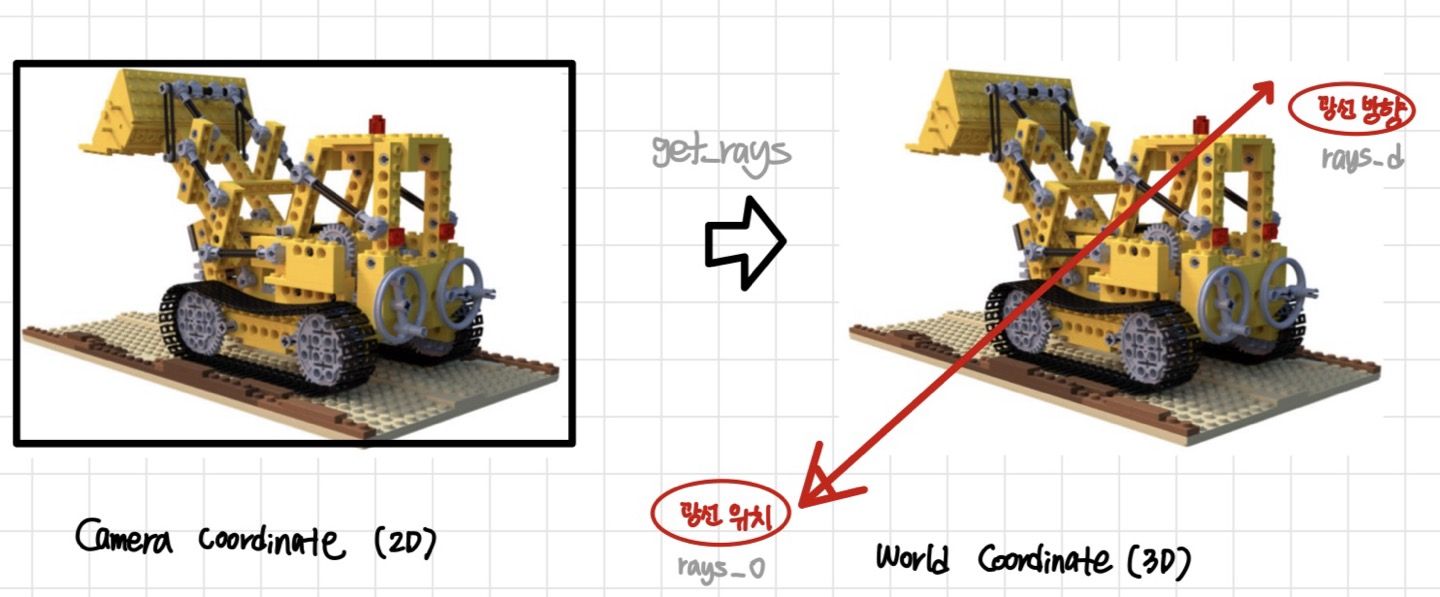

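First, we work out from which direction each input image views the object (Direction). (def get_rays)

(This step uses the image's size and focal length together with its pose, a camera parameter. Both the images and the poses are given at the start.)

Obtaining the direction information means we have moved from 2D pixel coordinates on the image into the actual 3D space (world coordinates), and, as in the figure above, we now know the origin and the direction of each ray.

(get_rays, rays_o, and rays_d appear in the code.)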

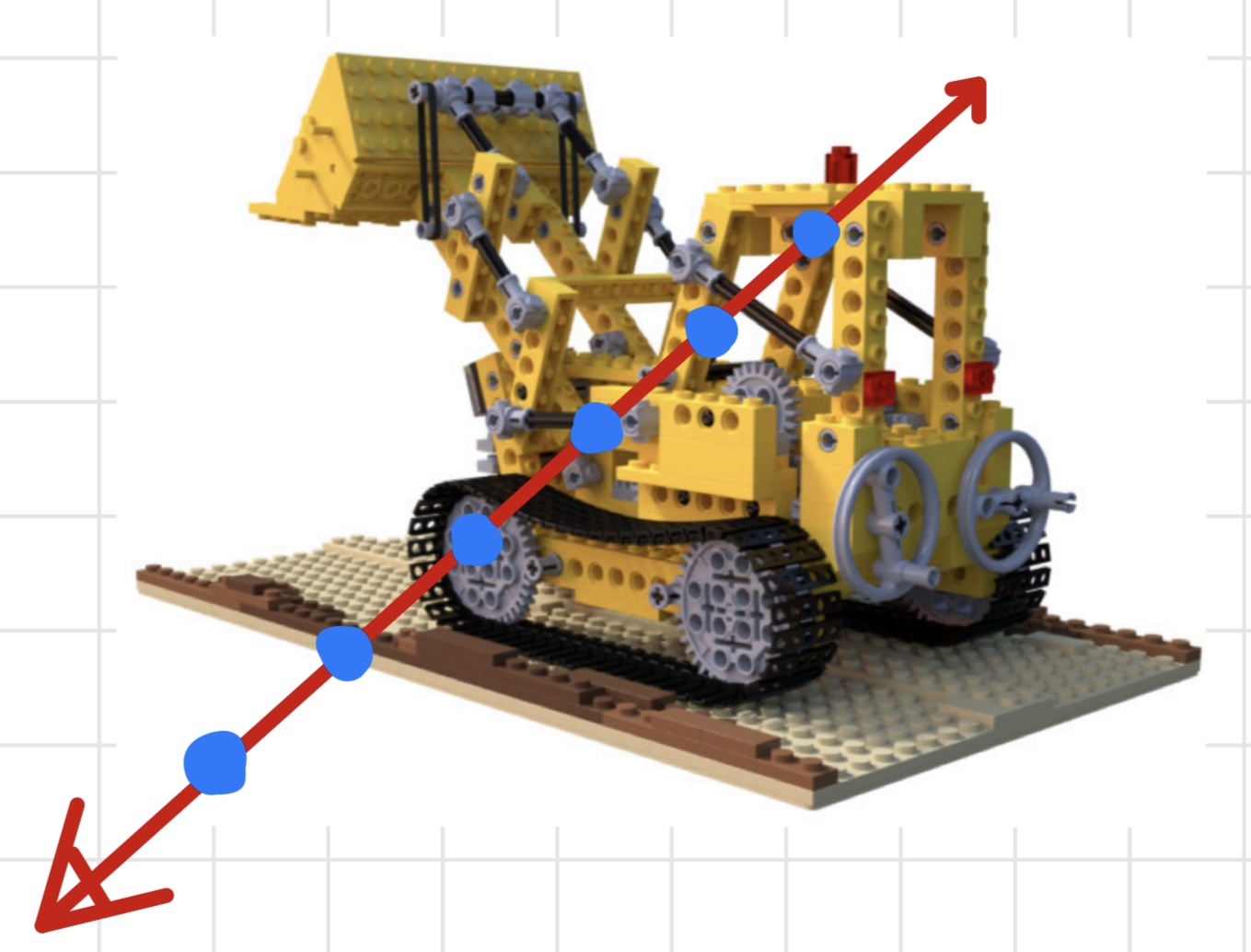

Once we are in 3D space, we sample N points along each ray, starting from the ray's origin.

Then, for every sampled point, we compute its position (Position), that is, its (x, y, z) coordinates.
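The sampling step can be sketched in NumPy as follows. This is a minimal illustration, not the notebook's code: the ray origin `ray_o`, the direction `ray_d`, and the values of `near`, `far`, and `n_samples` are made-up inputs chosen for the example.

```python
import numpy as np

# Hypothetical single ray: origin and direction in world space
ray_o = np.array([0.0, 0.0, 4.0])
ray_d = np.array([0.0, 0.0, -1.0])

near, far, n_samples = 2.0, 6.0, 5  # assumed depth range and sample count

# Depths at which to sample, evenly spaced between near and far
z_vals = np.linspace(near, far, n_samples)

# Position of each sample: origin + depth * direction -> shape (n_samples, 3)
pts = ray_o + z_vals[:, None] * ray_d

print(pts.shape)  # (5, 3)
print(pts[0])     # the first sample, at depth `near`
```

Each row of `pts` is one (x, y, z) position, which is what gets passed on to the MLP in step (b).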

(b)

The Direction and Position obtained in (a) are passed through an MLP to obtain the Color (RGB) and the density (Density).

(c)

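We need to check whether the Color and Density values obtained in (b) represent the input image well.

So we run Volume Rendering, the process that turns Color and Density into a 2D image.

(★ Confusing part: what role does volume rendering play? ★)

Because an input image appears in overview (a) above, I briefly thought that the image itself was fed into the MLP for training.

That is not the case. From the input image, we start with only its direction information (the rays).

We then sample to get the positions of the points on each ray, and pass these through the MLP to get color and density values.

In other words, the input image serves as the GT (Ground Truth) image: we build a 2D image from the color and density values predicted by the MLP, compare the two, and update the MLP's weights to reduce the difference.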

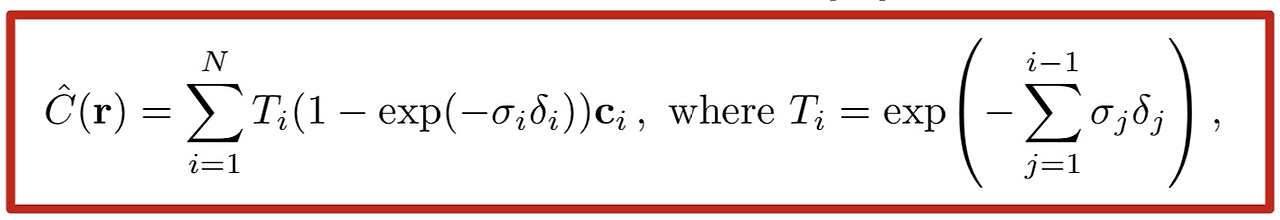

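Volume Rendering uses the rendering formula to accumulate the color and density values of the points sampled on each ray into a single color value, with each point's color weighted according to the densities along the ray.

(See the paper review for a detailed explanation of the formula.)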
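For reference, the discrete form of the rendering formula given in the NeRF paper can be written as below. Here $N$ is the number of samples on a ray $\mathbf{r}$, $\sigma_i$ and $\mathbf{c}_i$ are the density and color at sample $i$, and $\delta_i = t_{i+1} - t_i$ is the distance between adjacent samples.

```latex
\hat{C}(\mathbf{r}) = \sum_{i=1}^{N} T_i \left(1 - \exp(-\sigma_i \delta_i)\right) \mathbf{c}_i,
\qquad
T_i = \exp\!\left(-\sum_{j=1}^{i-1} \sigma_j \delta_j\right)
```

$T_i$ is the accumulated transmittance: the fraction of light that reaches sample $i$ without being blocked by the samples in front of it.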

(★ Confusing part: the formula yields only a single color value, so how does that make a 2D image? ★)

Steps (a) and (b) are both carried out per pixel.

So volume rendering produces a final color value for each pixel, and collecting all of them gives one 2D image.

(The shapes of rays_o and rays_d in the code confirm this.)
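To make the per-pixel point concrete, here is a small NumPy sketch of volume rendering over a whole grid of rays. It follows the same steps as the volume rendering part of render_rays in the code section of this post, but the function name `volume_render` and the random `rgb` and `sigma` arrays (stand-ins for the MLP output) are assumptions made for illustration.

```python
import numpy as np

def volume_render(rgb, sigma, z_vals):
    """Composite per-sample colors into one color per ray.

    rgb:    (..., N, 3) colors of the N samples on each ray
    sigma:  (..., N)    densities of the samples
    z_vals: (N,)        sample depths along the ray
    """
    # Distance between adjacent samples; the last one is treated as very large
    dists = np.concatenate([z_vals[1:] - z_vals[:-1], [1e10]])
    alpha = 1.0 - np.exp(-sigma * dists)
    # Exclusive cumulative product: transmittance up to (not including) sample i
    trans = np.cumprod(1.0 - alpha + 1e-10, axis=-1)
    trans = np.concatenate([np.ones_like(trans[..., :1]), trans[..., :-1]], axis=-1)
    weights = alpha * trans
    # Weighted sum over the sample axis leaves one RGB value per ray
    return np.sum(weights[..., None] * rgb, axis=-2)

H, W, N = 4, 5, 8                    # tiny image, 8 samples per ray
rng = np.random.default_rng(0)
rgb = rng.random((H, W, N, 3))       # stand-in for MLP colors
sigma = rng.random((H, W, N))        # stand-in for MLP densities
z_vals = np.linspace(2.0, 6.0, N)

image = volume_render(rgb, sigma, z_vals)
print(image.shape)  # (4, 5, 3)
```

The sample axis disappears and one color per pixel remains, so the output already has the shape of an H x W RGB image.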

(d)

We compute the loss between the GT image and the volume-rendered 2D image, and update the weights in the direction that reduces it.
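As a small worked example of this comparison, the sketch below computes a mean squared error between two images and converts it to PSNR, the quality metric plotted during training in the notebook. The helper names `mse_loss` and `psnr_from_mse` and the constant-valued toy images are assumptions for illustration.

```python
import numpy as np

def mse_loss(rendered, target):
    """Mean squared error over all pixels and channels."""
    return float(np.mean((rendered - target) ** 2))

def psnr_from_mse(mse):
    """PSNR in dB for images whose values lie in [0, 1]."""
    return -10.0 * np.log10(mse)

# Toy 4x4 RGB images: every rendered value is off by 0.1
target = np.full((4, 4, 3), 0.6)
rendered = np.full((4, 4, 3), 0.5)

loss = mse_loss(rendered, target)
print(loss, psnr_from_mse(loss))  # roughly 0.01 and roughly 20 dB
```

A lower loss means a higher PSNR, which is why the PSNR curve rises as training reduces the loss.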

Understanding Through Code

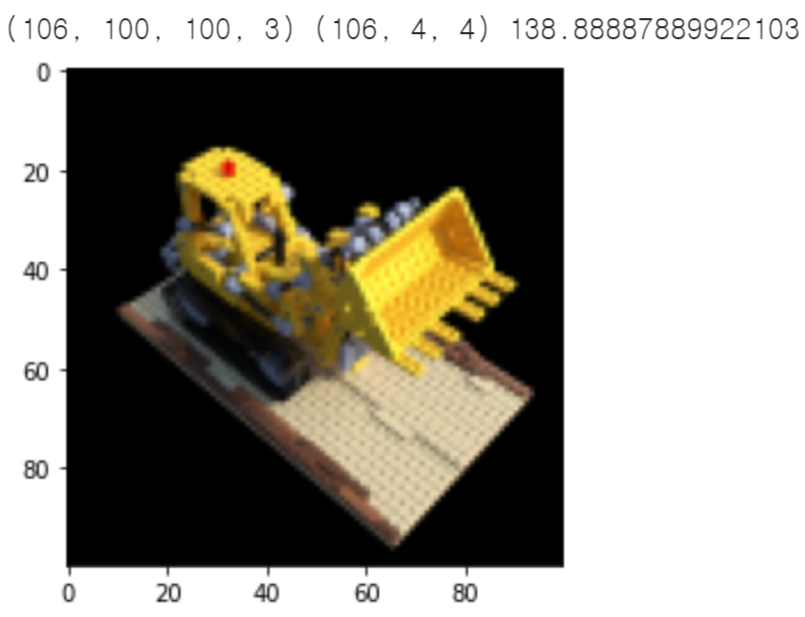

Load Input Images and Poses

    import numpy as np
    import matplotlib.pyplot as plt

    data = np.load('tiny_nerf_data.npz')
    images = data['images']
    poses = data['poses']
    focal = data['focal']
    H, W = images.shape[1:3]
    print(images.shape, poses.shape, focal)

    # Hold out one view for testing; train on the first 100 images (RGB only)
    testimg, testpose = images[101], poses[101]
    images = images[:100,...,:3]
    poses = poses[:100]

    plt.imshow(testimg)
    plt.show()

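I will explain using the image above, which is one of the images in the dataset.

Image shape: B = 106, H = 100, W = 100, C = 3

Camera pose shape: B = 106, each a 4x4 transformation matrix

> The transformation matrix defines the camera's position and orientation.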
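As a quick illustration of what such a 4x4 matrix holds, the sketch below splits a made-up camera-to-world pose into its rotation and position. The matrix values are hypothetical; the -z viewing direction matches the `-tf.ones_like(i)` term that get_rays uses for the z component.

```python
import numpy as np

# Hypothetical camera-to-world pose: no rotation, camera placed at (0.5, -1, 4)
c2w = np.array([
    [1.0, 0.0, 0.0, 0.5],
    [0.0, 1.0, 0.0, -1.0],
    [0.0, 0.0, 1.0, 4.0],
    [0.0, 0.0, 0.0, 1.0],
])

rotation = c2w[:3, :3]    # camera orientation (3x3)
camera_pos = c2w[:3, 3]   # camera position in world space

# The camera looks along -z in its own frame; rotate that into world space
forward = rotation @ np.array([0.0, 0.0, -1.0])
print(camera_pos, forward)
```

The upper-left 3x3 block gives the orientation and the last column gives the position, which are exactly the two pieces that get_rays reads via `c2w[:3,:3]` and `c2w[:3,-1]`.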

(a)

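    def get_rays(H, W, focal, c2w):
        # Pixel grid: i is the column (x) index, j is the row (y) index
        i, j = tf.meshgrid(tf.range(W, dtype=tf.float32), tf.range(H, dtype=tf.float32), indexing='xy')
        # Per-pixel ray direction in the camera frame (the camera looks along -z)
        dirs = tf.stack([(i-W*.5)/focal, -(j-H*.5)/focal, -tf.ones_like(i)], -1)
        # Rotate the directions into world space with the camera-to-world rotation
        rays_d = tf.reduce_sum(dirs[..., np.newaxis, :] * c2w[:3,:3], -1)
        # Every ray starts at the camera position
        rays_o = tf.broadcast_to(c2w[:3,-1], tf.shape(rays_d))
        return rays_o, rays_d

The function get_rays computes the Direction for an image from its size, the focal length, and the camera pose.

+ Consider the shapes of the result of passing the truck image through get_rays:

    rays_o, rays_d = get_rays(H, W, focal, pose)

Shape of rays_o: (H, W, 3)

Shape of rays_d: (H, W, 3)

>> A single call handles one pose, so each output is (H, W, 3); stacking the results for all 106 poses would give (106, H, W, 3). The last dimension of size 3 holds the (x, y, z) components of the ray origin or direction, not RGB.

This confirms that everything is done per pixel: there is one ray for every pixel.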
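The shapes can be checked without TensorFlow using a NumPy port of the same function. This is a sketch: the name `get_rays_np`, the identity poses, and the focal length of 138.0 are assumptions chosen for the example, not values read from the dataset.

```python
import numpy as np

def get_rays_np(H, W, focal, c2w):
    """NumPy version of get_rays: one ray per pixel for a single pose."""
    i, j = np.meshgrid(np.arange(W, dtype=np.float32),
                       np.arange(H, dtype=np.float32), indexing='xy')
    dirs = np.stack([(i - W * .5) / focal, -(j - H * .5) / focal, -np.ones_like(i)], -1)
    rays_d = np.sum(dirs[..., np.newaxis, :] * c2w[:3, :3], -1)
    rays_o = np.broadcast_to(c2w[:3, -1], rays_d.shape)
    return rays_o, rays_d

H, W, focal = 100, 100, 138.0          # assumed image size and focal length
pose = np.eye(4)                        # hypothetical pose: camera at the origin

rays_o, rays_d = get_rays_np(H, W, focal, pose)
print(rays_o.shape, rays_d.shape)       # (100, 100, 3) (100, 100, 3)

# Stacking the directions for several poses adds a leading batch axis
poses = np.stack([np.eye(4)] * 3)
all_rays_d = np.stack([get_rays_np(H, W, focal, p)[1] for p in poses])
print(all_rays_d.shape)                 # (3, 100, 100, 3)
```

With the identity pose, the ray through the image center points straight along -z, which is a quick sanity check on the sign conventions.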

(a) & (b) & (c)

    def render_rays(network_fn, rays_o, rays_d, near, far, N_samples, rand=False):

        def batchify(fn, chunk=1024*32):
            # Run fn over the inputs in chunks to limit memory use
            return lambda inputs : tf.concat([fn(inputs[i:i+chunk]) for i in range(0, inputs.shape[0], chunk)], 0)

        #-----------(a) Get Position by sampling
        # Compute 3D query points
        z_vals = tf.linspace(near, far, N_samples)  # sample N_samples depths along the ray
        if rand:
            # Jitter each depth randomly within its bin
            z_vals += tf.random.uniform(list(rays_o.shape[:-1]) + [N_samples]) * (far-near)/N_samples
        pts = rays_o[...,None,:] + rays_d[...,None,:] * z_vals[...,:,None]  # position of each sample point

        #-----------(b) Get Color & Density from the MLP
        # Run network
        pts_flat = tf.reshape(pts, [-1,3])
        pts_flat = embed_fn(pts_flat)  # embed_fn is defined elsewhere in the notebook
        raw = batchify(network_fn)(pts_flat)
        raw = tf.reshape(raw, list(pts.shape[:-1]) + [4])

        # Compute opacities and colors
        sigma_a = tf.nn.relu(raw[...,3])
        rgb = tf.math.sigmoid(raw[...,:3])

        #-----------(c) Volume Rendering
        # Do volume rendering
        dists = tf.concat([z_vals[..., 1:] - z_vals[..., :-1], tf.broadcast_to([1e10], z_vals[...,:1].shape)], -1)
        alpha = 1.-tf.exp(-sigma_a * dists)
        weights = alpha * tf.math.cumprod(1.-alpha + 1e-10, -1, exclusive=True)

        rgb_map = tf.reduce_sum(weights[...,None] * rgb, -2)
        depth_map = tf.reduce_sum(weights * z_vals, -1)
        acc_map = tf.reduce_sum(weights, -1)

        return rgb_map, depth_map, acc_map

The function render_rays contains all three of the following: the Position computation from (a), the Color & Density computation from (b), and (c) Volume Rendering.
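The code above calls `embed_fn` without showing it. Assuming it is a sin/cos positional encoding of the kind described in the NeRF paper, a NumPy sketch could look like the following. The function name `posenc` and the default `L_embed=6` are assumptions here, so check the notebook for the actual definition.

```python
import numpy as np

def posenc(x, L_embed=6):
    """Append sin/cos features at L_embed frequencies to the raw coordinates.

    x: (..., 3) positions. Returns (..., 3 + 3 * 2 * L_embed) features.
    """
    feats = [x]
    for i in range(L_embed):
        for fn in (np.sin, np.cos):
            feats.append(fn(2.0 ** i * x))
    return np.concatenate(feats, axis=-1)

pts_flat = np.random.default_rng(0).random((5, 3))  # five made-up 3D points
encoded = posenc(pts_flat)
print(encoded.shape)  # (5, 39)
```

With these assumed settings, each 3D point becomes a 39-dimensional vector before it enters the MLP, which gives the network access to higher-frequency detail than raw (x, y, z) coordinates alone.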

(d)

    model = init_model()  # init_model is defined elsewhere in the notebook
    optimizer = tf.keras.optimizers.Adam(5e-4)

    N_samples = 64
    N_iters = 1000
    psnrs = []
    iternums = []
    i_plot = 25

    import time
    t = time.time()
    for i in range(N_iters+1):

        # Pick one random training image and build its rays
        img_i = np.random.randint(images.shape[0])
        target = images[img_i]
        pose = poses[img_i]
        rays_o, rays_d = get_rays(H, W, focal, pose)

        #-----------(d) Compute the loss and apply the gradient update
        with tf.GradientTape() as tape:
            rgb, depth, acc = render_rays(model, rays_o, rays_d, near=2., far=6., N_samples=N_samples, rand=True)
            loss = tf.reduce_mean(tf.square(rgb - target))
        gradients = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(gradients, model.trainable_variables))

        if i%i_plot==0:
            print(i, (time.time() - t) / i_plot, 'secs per iter')
            t = time.time()

            # Render the holdout view for logging
            rays_o, rays_d = get_rays(H, W, focal, testpose)
            rgb, depth, acc = render_rays(model, rays_o, rays_d, near=2., far=6., N_samples=N_samples)
            loss = tf.reduce_mean(tf.square(rgb - testimg))
            psnr = -10. * tf.math.log(loss) / tf.math.log(10.)

            psnrs.append(psnr.numpy())
            iternums.append(i)

            plt.figure(figsize=(10,4))
            plt.subplot(121)
            plt.imshow(rgb)
            plt.title(f'Iteration: {i}')
            plt.subplot(122)
            plt.plot(iternums, psnrs)
            plt.title('PSNR')
            plt.show()

    print('Done')

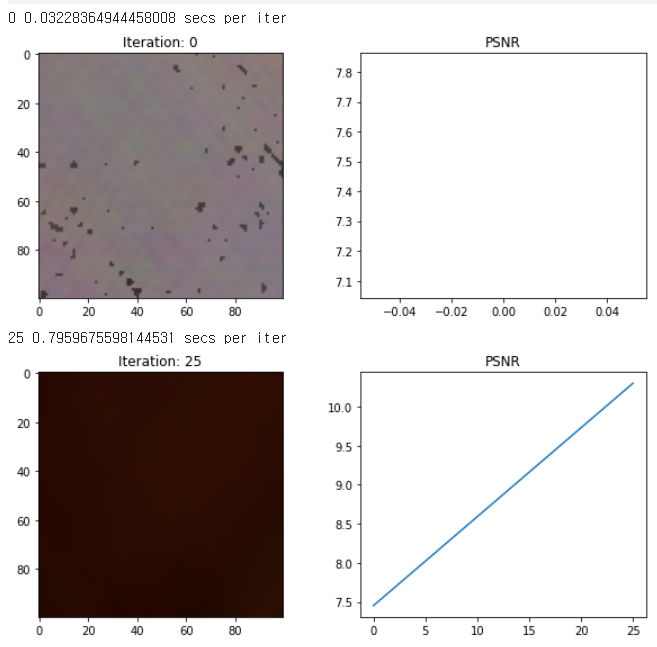

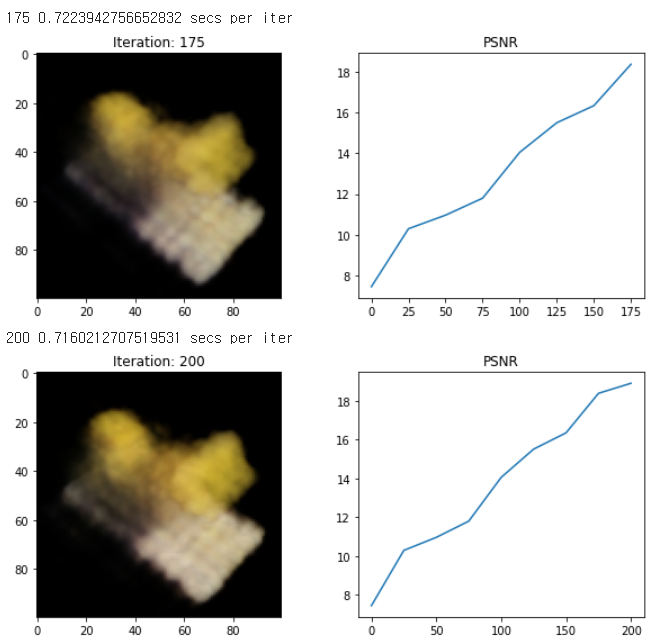

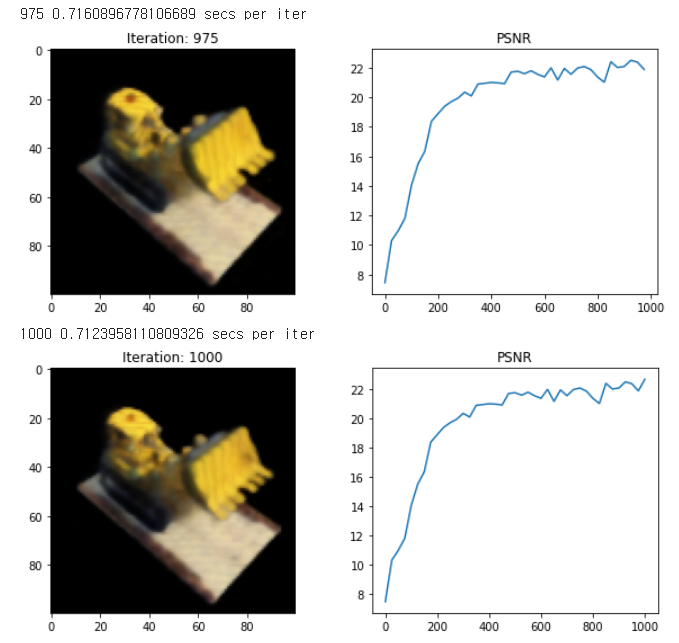

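Running the code above and printing the result every 25 iterations shows, as in these images, that the quality improves as training proceeds.

(Excerpts from the training process)

Of course, the code I referred to for this post is tiny_nerf.ipynb, which differs in some ways from the full NeRF model, but it still helped a lot in understanding the parts that confused me :)

(Written 2024.05.12)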
